Achievement

A team of researchers from Oak Ridge National Laboratory (ORNL) and Sandia National Laboratories, together with a graduate student intern and a professor from Purdue University, developed a Bayesian approach for optimizing the hyperparameters of an algorithm for training binary communication networks that can be deployed to neuromorphic hardware. They showed that optimizing the hyperparameters of this algorithm can significantly improve accuracy (by up to 15 percent) when designing neuromorphic computing systems. This jump in performance further underscores the potential of converting traditional neural networks to binary communication networks suitable for neuromorphic hardware.

Significance and Impact

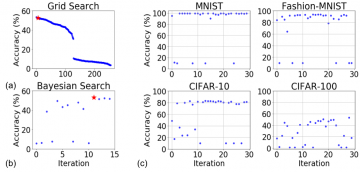

Training neural networks for neuromorphic deployment is non-trivial. A variety of approaches have been proposed to adapt back-propagation or back-propagation-like algorithms for training these networks. Because these networks often have very different performance characteristics than traditional neural networks, it is often unclear how to set either the network topology or the hyperparameters to achieve optimal performance. One such algorithm is Whetstone (developed at Sandia National Laboratories), which trains networks that use binary communication and are amenable to mapping onto spiking neuromorphic hardware. This approach has not only all of the hyperparameters associated with traditional neural network or deep learning network training, but also additional hyperparameters of its own. These hyperparameters have a significant effect on the performance of the algorithm. The presented framework provides a way to automatically discover an optimal set of hyperparameters that drastically improves the performance of the network. For a simple case study, the researchers also showed that this hyperparameter optimization approach can discover an optimal hyperparameter set similar to the one found by a grid search, but with far fewer evaluations and in far less time.

Research Details

- The research team proposed a simple, effective, and generalizable hyperparameter optimization algorithm that can be applied to any type of spiking neuromorphic algorithm or architecture.

- They performed a sensitivity analysis on spiking neuromorphic system hyperparameters and discussed the strategic role that some sets of hyperparameters play in the system's final performance.

- They showed that, for a design search space of almost 400 million hyperparameter combinations, the technique needs only 30 iterations of Bayesian hyperparameter optimization to predict the optimal hyperparameter set, which increases the state-of-the-art accuracy by up to 15 percent.
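To make the contrast with grid search concrete, the following is a minimal sketch of a Bayesian optimization loop over a discrete candidate grid. It is not the researchers' implementation: the highlight does not specify the surrogate model or acquisition function, so the Gaussian process with a squared-exponential kernel, the expected-improvement criterion, the two-dimensional grid, and the `toy_objective` function (a hypothetical stand-in for the accuracy of a trained network) are all assumptions chosen for illustration.

```python
import math
import numpy as np


def toy_objective(x):
    """Hypothetical stand-in for validation accuracy, peaking at (0.3, 0.7)."""
    return float(np.exp(-8.0 * np.sum((x - np.array([0.3, 0.7])) ** 2)))


def rbf_kernel(a, b, length_scale=0.2):
    """Squared-exponential kernel between two sets of points (assumed surrogate)."""
    sq_dist = np.sum((a[:, None, :] - b[None, :, :]) ** 2, axis=-1)
    return np.exp(-0.5 * sq_dist / length_scale**2)


def gp_posterior(x_obs, y_obs, x_cand, noise=1e-4):
    """Posterior mean and standard deviation of a zero-mean GP at x_cand."""
    k = rbf_kernel(x_obs, x_obs) + noise * np.eye(len(x_obs))
    k_s = rbf_kernel(x_obs, x_cand)
    k_inv_k_s = np.linalg.solve(k, k_s)
    mean = k_inv_k_s.T @ y_obs
    var = 1.0 - np.sum(k_s * k_inv_k_s, axis=0)
    return mean, np.sqrt(np.clip(var, 1e-12, None))


def expected_improvement(mean, std, best):
    """Expected improvement over the best value seen so far (maximization)."""
    z = (mean - best) / std
    cdf = 0.5 * (1.0 + np.vectorize(math.erf)(z / math.sqrt(2.0)))
    pdf = np.exp(-0.5 * z**2) / math.sqrt(2.0 * math.pi)
    return (mean - best) * cdf + std * pdf


def bayes_opt(objective, candidates, n_init=5, n_iter=25, seed=0):
    """Evaluate n_init random candidates, then n_iter candidates chosen by EI."""
    rng = np.random.default_rng(seed)
    tried = np.zeros(len(candidates), dtype=bool)
    tried[rng.choice(len(candidates), size=n_init, replace=False)] = True
    x_obs = candidates[tried]
    y_obs = np.array([objective(x) for x in x_obs])
    for _ in range(n_iter):
        remaining = np.flatnonzero(~tried)
        offset = y_obs.mean()  # center targets so the zero-mean GP fits better
        mean, std = gp_posterior(x_obs, y_obs - offset, candidates[remaining])
        ei = expected_improvement(mean + offset, std, y_obs.max())
        pick = remaining[np.argmax(ei)]  # most promising untried candidate
        tried[pick] = True
        x_obs = np.vstack([x_obs, candidates[pick]])
        y_obs = np.append(y_obs, objective(candidates[pick]))
    return x_obs, y_obs


if __name__ == "__main__":
    axis = np.linspace(0.0, 1.0, 21)
    candidates = np.array([(a, b) for a in axis for b in axis])
    x_obs, y_obs = bayes_opt(toy_objective, candidates)
    print("grid size:", len(candidates), "evaluations used:", len(y_obs))
    print("best point found:", x_obs[np.argmax(y_obs)], "value:", y_obs.max())
```

In this toy setting a grid search would evaluate all 441 candidates, while the loop above evaluates 30 of them and uses the surrogate to decide where to look next. The actual search space in the study is far larger and each evaluation requires training a network, which is where the savings in evaluations matter most.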

Citation and DOI

Maryam Parsa, Catherine D. Schuman, Prasanna Date, Derek C. Rose, Bill Kay, J. Parker Mitchell, Steven R. Young (ORNL), Ryan Dellana, William Severa, Kaushik Roy, and Thomas E. Potok. “Hyperparameter Optimization in Binary Communication Networks for Neuromorphic Deployment.” International Joint Conference on Neural Networks (IJCNN) 2020.

Overview

In this work, the researchers introduced a Bayesian approach for optimizing the hyperparameters of an algorithm for training binary communication networks that can be deployed to neuromorphic hardware. They showed that optimizing the hyperparameters of this algorithm for each dataset improves accuracy over the previous state of the art for this algorithm on that dataset (by up to 15 percent). This jump in performance further underscores the potential of converting traditional neural networks to binary communication networks suitable for neuromorphic hardware.

Last Updated: January 14, 2021 - 8:52 pm