Achievement

The Application Development (AD) and Software Technology (ST) projects of the Exascale Computing Project (ECP) were surveyed to better understand their current and planned use of the Message Passing Interface (MPI) for exascale computing.

Significance and Impact

Much of the community’s understanding of the needs and plans surrounding widely used tools such as MPI is based on many individual interactions with MPI users, which makes it anecdotal rather than systematic. To our knowledge, this paper is the first attempt to broadly characterize a community’s requirements and plans for MPI using a common, well-defined set of questions. The results of this survey will help guide work in the ECP and the broader community.

Research Details

- A survey document was developed and distributed to all ECP AD and ST project leads.

- The survey asked a total of 64 questions covering project and application demographics, basic performance characterization, MPI usage patterns, the MPI tools ecosystem, the memory hierarchy, accelerators, resilience, the use of other programming models, and the use of MPI with threads. Questions were a mix of multiple-choice and free-response formats.

- The team analyzed and summarized the responses to all questions, broken down by the AD and ST portions of the ECP.

Overview

The Exascale Computing Project (ECP) is currently the primary effort in the United States focused on developing “exascale” levels of computing capability. To better understand how ECP projects are using, and plan to use, the Message Passing Interface (MPI), and to help guide the work of our own project within the ECP, we created a survey. Of the 97 ECP projects active when the survey was distributed, 77 responded (a response rate of about 79%), and 56 of those respondents (about 73%) reported that their projects use MPI. This paper reports the results of that survey for the benefit of the broader community of MPI developers.

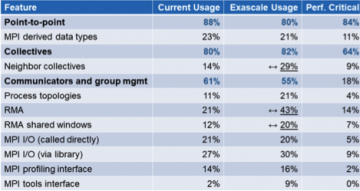

The survey's first conclusion is that MPI still holds a major place in the exascale ecosystem for both Application Development and Software Technology projects. The survey also confirms that point-to-point and collective communication are by far the capabilities of greatest interest, although one-sided communication (remote memory access, RMA) is gaining interest in the context of exascale.

The survey also shows that accelerators play a critical role in ECP projects. Notably, a third of developers expect to call MPI from within GPU kernels, a feature that is still fairly novel for most MPI implementations. OpenMP is by far the preferred option for expressing on-node parallelism, and developers expect to manage the memory hierarchy explicitly. Checkpoint/restart remains the preferred approach for coping with failures. Finally, C++ is overtaking Fortran as the preferred language from which to call MPI. However, answers varied widely for many of the questions, pointing to a complex exascale ecosystem with many languages and diverse MPI requirements for both the standard and its implementations.

Last Updated: May 28, 2020 - 4:04 pm