Achievement

Characterized and quantified time synchronization on leadership-class computers.

Significance and Impact

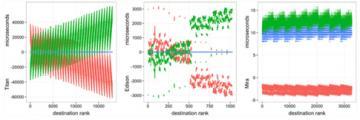

We present a detailed examination of time agreement characteristics for nodes within extreme-scale parallel computers. Using a software tool we introduce in this paper, we quantify attributes of clock skew among nodes in three representative high-performance computers sited at three national laboratories. Our measurements detail the statistical properties of time agreement among nodes and how time agreement drifts over typical application execution durations. We discuss the implications of our measurements, explain why the current state of the field is inadequate, and propose strategies to address the observed shortcomings.

Research Details

- To our knowledge, ours is the first paper to present a detailed statistical analysis of clock agreement in high-performance computing environments.

- We present results collected from a number of tests conducted on some of the world's most powerful computers. We then analyze these results and how they might apply to different applications and scenarios.

- We discuss the implications of our measurements and why the current state of the field is inadequate, and we propose strategies to address observed shortcomings.

- At the outset of the work presented in this paper, we expected to find a much lower degree of uncertainty among node clocks in large-scale supercomputers than our results uncovered. We believe that time synchronization has been neglected to this point because of the gap between how supercomputers are used by the research community and how they are provided as resources by supercomputing centers. System software researchers are possibly in the best position to leverage the benefits of tightly synchronized node clocks but are unlikely to be in a position to effect a change in how a given supercomputer is run. On the other hand, the operations personnel who are responsible for maintaining supercomputer systems may not recognize the value of tightly synchronized node clocks or know the degree to which node clocks disagree, mistakenly believing that the temperature- and power-controlled environment in data centers, along with the use of commodity time synchronization protocols such as NTP, is enough. Our aim with this paper is to highlight these ideas, supported by our measurements and findings, to the supercomputing community.

Overview

The trend towards increasing node counts in high-performance computing (HPC) is motivating a move toward greater levels of concurrency in HPC systems. Today's software environment is being called on to produce new solutions for emerging issues, including managing system power, resilience, and performance characteristics. The distributed algorithms that underlie such services operate much more efficiently in the presence of tightly synchronized clocks. For example, tightly synchronized clocks benefit well-known gang scheduling techniques and complex consensus algorithms. Such time synchronization also enables more aggressive assumptions about communication and synchronization patterns, the removal of unnecessary locks, and a wide range of other applications. Clock-based techniques are already frequently deployed in cloud and data center distributed systems for precisely these reasons.

We examined time synchronization on some of the world's fastest and most powerful machines. These leadership-class systems employ high-end hardware connected by an extremely low-latency, low-jitter interconnect in a carefully controlled environment, in contrast to widely distributed cloud-based systems built on commodity hardware and networks. Because of this, we assumed that these systems would have more stable, predictable hardware clocks and close base time agreement using only standard time synchronization systems such as the Network Time Protocol (NTP). We did not believe that the complex hardware and software techniques used to provide time synchronization in wide-area systems would be necessary in leadership systems.

Our results demonstrate that the actual time uncertainty for leadership-class machines is often unexpectedly large, in some cases over 600 milliseconds despite network latencies of less than two microseconds. Building on this, we set out to thoroughly quantify the magnitude of the time synchronization challenge in leadership-class systems. This study shows that the current time protocol in use, NTP, is not suitable for providing the level of time synchronization necessary for important system software tasks such as coordinated scheduling. Based on this, we conclude that more complex time synchronization techniques are in fact needed to provide tight time synchronization in these systems, and we discuss the specific techniques most appropriate to HPC systems according to our findings.
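To make the quantities above concrete, the following is a minimal sketch of the standard four-timestamp offset estimate used in NTP-style exchanges. It is not the measurement tool introduced in the paper; the function name `estimate_offset`, the helper `simulate_exchange`, and all numeric values are illustrative assumptions. The sketch shows that when the path is symmetric, the estimated offset recovers the true clock disagreement, and that the estimate's error is bounded by half the round-trip delay.

```python
def estimate_offset(t0, t1, t2, t3):
    """NTP-style estimate from one request/reply exchange.

    t0: request sent (local clock)     t1: request received (remote clock)
    t2: reply sent (remote clock)      t3: reply received (local clock)
    Returns (offset, delay): remote-minus-local clock offset and the
    round-trip network delay, in the same units as the inputs.
    """
    offset = ((t1 - t0) + (t2 - t3)) / 2
    delay = (t3 - t0) - (t2 - t1)
    return offset, delay


def simulate_exchange(true_offset_ns, one_way_ns, processing_ns, start_ns=0):
    """Hypothetical helper: timestamps for a symmetric-latency exchange."""
    t0 = start_ns
    t1 = t0 + one_way_ns + true_offset_ns          # stamped by remote clock
    t2 = t1 + processing_ns                        # stamped by remote clock
    t3 = t0 + one_way_ns + processing_ns + one_way_ns  # stamped by local clock
    return t0, t1, t2, t3


# Assumed scenario: clocks disagree by 600 ms, one-way latency is 2 us.
stamps = simulate_exchange(true_offset_ns=600_000_000,
                           one_way_ns=2_000,
                           processing_ns=1_000)
offset_ns, delay_ns = estimate_offset(*stamps)
max_error_ns = delay_ns / 2  # bound on the estimate's error from path asymmetry
```

With a round trip of a few microseconds, a single exchange of this kind can in principle resolve offsets far smaller than the hundreds of milliseconds reported above, which is why such large disagreement on a low-latency interconnect is notable.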

Last Updated: May 28, 2020 - 4:04 pm