Project Status: Active

Project Summary: We propose to develop a scalable black-box training framework for scientific machine learning (SciML) models that cannot be trained with existing automatic differentiation-based algorithms. Our particular interest in this effort is how to train data-driven SciML models to learn the missing physics of a complex system in order to improve forward simulations. Specifically, this effort aims to achieve the following objectives: (1) develop a novel non-local gradient with structured sampling that enables non-local exploration for escaping from local minima and achieves sufficient accuracy in gradient estimation; (2) advance theoretical analyses for a class of non-convex training problems to help domain scientists tune the hyper-parameters of the proposed training framework; and (3) exploit high-performance computing to accelerate the time to solution for black-box training problems whose loss functions involve computationally expensive black-box simulators. The proposed framework will be demonstrated on two distinct applications. The first is to train machine learning-based constitutive models to predict mercury dynamics in the Spallation Neutron Source mercury target, and the second is to train heat-source models to predict time-dependent laser scan paths that yield desirable micro-structures in three-dimensional printed metal components. This effort will advance the state of the art in several machine learning areas, such as reinforcement learning and variational inference.
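To make objective (1) more concrete, the following is a minimal sketch of one way a non-local gradient with structured sampling could be estimated, in the spirit of the directional Gaussian smoothing named in the publications below. It is an illustration under stated assumptions, not the project's implementation: the search directions are taken to be the coordinate axes, the structured samples are the nodes of a fixed 3-point Gauss-Hermite rule, and the names `smoothed_gradient`, `descend`, and `quadratic` are hypothetical. The loss is treated as a black box that is only ever evaluated, never differentiated.

```python
import math

# 3-point Gauss-Hermite rule for the weight exp(-t^2) (assumed quadrature size).
GH_NODES = (-math.sqrt(1.5), 0.0, math.sqrt(1.5))
GH_WEIGHTS = (
    math.sqrt(math.pi) / 6.0,
    2.0 * math.sqrt(math.pi) / 3.0,
    math.sqrt(math.pi) / 6.0,
)


def smoothed_gradient(loss, x, sigma):
    """Estimate the derivative of the Gaussian-smoothed loss along each axis.

    Along axis i, the 1D slice y -> loss(x + y * e_i) is smoothed with a
    Gaussian of standard deviation sigma, and the derivative of the smoothed
    slice at y = 0 is approximated by Gauss-Hermite quadrature. A larger
    sigma probes the loss farther away from x.
    """
    grad = []
    for i in range(len(x)):
        total = 0.0
        for t, w in zip(GH_NODES, GH_WEIGHTS):
            probe = list(x)
            probe[i] += math.sqrt(2.0) * sigma * t  # structured sample point
            total += w * math.sqrt(2.0) * t * loss(probe)
        grad.append(total / (sigma * math.sqrt(math.pi)))
    return grad


def descend(loss, x0, sigma=1.0, lr=0.1, steps=100):
    """Plain gradient descent driven by the smoothed-gradient estimate."""
    x = list(x0)
    for _ in range(steps):
        g = smoothed_gradient(loss, x, sigma)
        x = [xi - lr * gi for xi, gi in zip(x, g)]
    return x


def quadratic(x):
    """Toy black-box loss used only to exercise the sketch."""
    return sum(xi * xi for xi in x)
```

On the toy quadratic loss the quadrature is exact, so `smoothed_gradient(quadratic, [1.0, -2.0], 0.5)` agrees with the true gradient up to rounding. For an expensive simulator, every probe in the inner loop is an independent loss evaluation, which is where the parallelism of objective (3) would apply.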

Principal Investigator: Guannan Zhang (CSMD, ORNL)

Senior Investigators: Jiaxin Zhang (CSMD, ORNL), Hoang Tran (CSMD, ORNL), Dan Lu (CSED, ORNL), Matthew Bement (CSED, ORNL), Yousub Lee (CSED, ORNL), Benjamin Stump (MSTD, ORNL), Sirui Bi (CSED, ORNL)

Funding Period: Sept. 2020 to Aug. 2022

Publications:

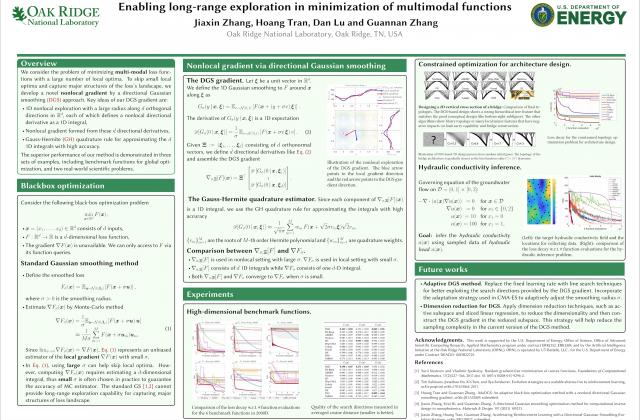

- H. Tran and G. Zhang, An adaptive nonlocal gradient descent method for high-dimensional black-box optimization, SIAM Journal on Scientific Computing, under review.

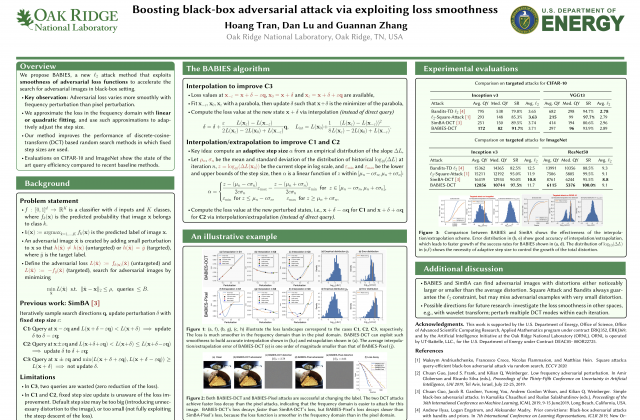

- H. Tran, D. Lu, and G. Zhang, Exploiting the local parabolic landscapes of adversarial losses to accelerate black-box adversarial attack, Proceedings of 17th European Conference on Computer Vision (ECCV 2022), pp. 317–334, 2022.

- M. Radaideh, H. Tran, L. Lin, H. Jiang, D. Winder, S. Gorti, G. Zhang, J. Mach, S. Cousineau, Model Calibration of the Liquid Mercury Spallation Target using Evolutionary Neural Networks and Sparse Polynomial Expansions, Nuclear Inst. and Methods in Physics Research B, 525(15), pp. 41-54, 2022.

- Sirui Bi, Benjamin Stump, Yousub Lee, John Coleman, Matt Bement, Guannan Zhang, Blackbox Optimization for High-fidelity Heat Transfer Calculations in Metal Additive Manufacturing, Results in Materials, 12, pp. 100258, 2022.

- Jiaxin Zhang, Hoang Tran, and Guannan Zhang, Accelerating Reinforcement Learning with a Directional-Gaussian-Smoothing Evolution Strategy, Electronic Research Archive (doi:10.3934/era.2021075), 2021.

- Jiaxin Zhang, Hoang Tran, Dan Lu, and Guannan Zhang, Enabling long-range exploration in minimization of multimodal functions, Proceedings of 37th Conference on Uncertainty in Artificial Intelligence (UAI), 2021. (https://arxiv.org/abs/2002.03001)

- Hoang Tran, Dan Lu and Guannan Zhang, Boosting black-box adversarial attack via exploiting loss smoothness, Proceedings of ICLR Workshop on Security and Safety in Machine Learning Systems, 2021.

- Jiaxin Zhang, Sirui Bi, and Guannan Zhang, A directional Gaussian smoothing optimization method for computational inverse design in nanophotonics, Materials & Design, 197 (1), pp. 109213, 2021.

- Sirui Bi, Jiaxin Zhang and Guannan Zhang, Towards efficient uncertainty estimation in deep learning for robust energy prediction in materials chemistry, Proceedings of ICLR Workshop on Deep Learning for Simulation, 2021.

- Jiaxin Zhang, Sirui Bi, and Guannan Zhang, A nonlocal-gradient descent method for inverse design in nanophotonics, Proceedings of NeurIPS Workshop on Machine Learning for Engineering Modeling, Simulation and Design, Dec. 2020.

Activities:

- In October 2022, H. Tran presented our work on “Exploiting the local parabolic landscapes of adversarial losses to accelerate black-box adversarial attack” at the 17th European Conference on Computer Vision (ECCV 2022).

- In July 2022, G. Zhang presented our work on “a nonlocal gradient for high-dimensional black-box optimization” at the SIAM Annual Meeting.

- In April 2022, H. Tran presented our work on “a nonlocal gradient descent method for high-dimensional black-box optimization” at the SIAM Conference on UQ.

- In July 2021, Sirui Bi gave a presentation on "A hybrid blackbox optimization for efficient calibration of heat conduction models in additive manufacturing" at the U.S. National Congress on Computational Mechanics.

- In July 2021, Guannan Zhang gave a presentation on "Enabling long-range exploration in minimization of multimodal functions" at the 37th Conference on Uncertainty in Artificial Intelligence (UAI 2021).

- In May 2021, Hoang Tran gave a presentation on "Boosting black-box adversarial attack via exploiting loss smoothness" at the ICLR 2021 Workshop on Security and Safety in Machine Learning Systems.

- In March 2021, Guannan Zhang gave a presentation on "A nonlocal gradient for high-dimensional blackbox optimization in scientific machine learning" at the SIAM Conference on CS&E.

- In December 2020, Sirui Bi gave a presentation on "Directional Gaussian Smoothing Optimization for Inverse Design in Nanophotonics" at the Conference on Machine Learning in Science and Engineering (MLSE 2020).

- In December 2020, Jiaxin Zhang gave a presentation on "A nonlocal-gradient descent method for inverse design in nanophotonics" at the NeurIPS 2020 Workshop on Machine Learning for Engineering Modeling, Simulation and Design.

Last Updated: November 29, 2022 - 12:45 pm