Achievement

We proposed a novel implicit residual neural network architecture with improved stability and robustness properties. We also described a regularization approach and a learning algorithm for training such networks efficiently.

Research Details

- proposed new network architecture

- developed regularization approach and learning algorithm

- created software implementing the proposed model

Overview

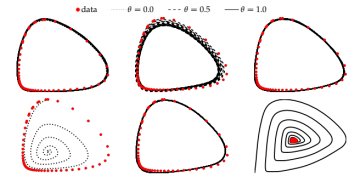

In this effort, we propose a new deep architecture built from residual blocks inspired by implicit discretization schemes. Unlike in standard feed-forward networks, the outputs of the proposed implicit residual blocks are defined as the fixed points of appropriately chosen nonlinear transformations. We show that this choice improves the stability of both forward and backward propagation, has a favorable impact on generalization, and makes it possible to control the robustness of the network with only a few hyperparameters. In addition, the proposed reformulation of ResNet does not introduce new parameters and can potentially reduce the number of required layers thanks to improved forward stability. Finally, we derive a memory-efficient training algorithm, propose a stochastic regularization technique, and provide numerical results in support of our findings.
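To make the fixed-point definition concrete, the following is a minimal sketch of a single implicit residual block. It is not the implementation from the paper. It assumes a theta-scheme style update, y = x + (1 - theta) F(x) + theta F(y), a hypothetical residual function F(y) = tanh(W y + b), and plain fixed-point iteration as the solver. The names `residual`, `implicit_block`, `theta`, `W` and `b` are all illustrative. With theta = 0 the block reduces to the usual explicit ResNet update y = x + F(x).

```python
import math


def residual(y, W, b):
    """Hypothetical residual function F(y) = tanh(W y + b) (an assumption)."""
    return [
        math.tanh(sum(w * v for w, v in zip(row, y)) + bias)
        for row, bias in zip(W, b)
    ]


def implicit_block(x, W, b, theta=0.5, tol=1e-12, max_iter=500):
    """Return y solving y = x + (1 - theta) * F(x) + theta * F(y).

    The equation is solved by simple fixed-point iteration, which converges
    when theta times the Lipschitz constant of F is below one.
    """
    # The explicit part does not depend on y, so compute it once.
    fx = residual(x, W, b)
    base = [xi + (1.0 - theta) * fi for xi, fi in zip(x, fx)]

    y = list(base)  # initial guess
    for _ in range(max_iter):
        fy = residual(y, W, b)
        y_next = [bi + theta * fi for bi, fi in zip(base, fy)]
        if max(abs(a - c) for a, c in zip(y_next, y)) < tol:
            return y_next
        y = y_next
    raise RuntimeError("fixed-point iteration did not converge")


# Illustrative weights with small row sums, so the iteration is a contraction.
W = [[0.3, -0.2], [0.1, 0.4]]
b = [0.0, 0.1]
x = [1.0, -0.5]

y_implicit = implicit_block(x, W, b, theta=0.5)
y_explicit = implicit_block(x, W, b, theta=0.0)  # standard ResNet block
```

Note that the implicit block uses the same `W` and `b` as the explicit one. Only the way the output is computed changes, which is consistent with the statement that the reformulation introduces no new parameters. A practical implementation would likely replace the plain iteration with a faster nonlinear solver.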

Citation

V. Reshniak and C. Webster. Robust learning with implicit residual networks. arXiv:1905.10479

Last Updated: January 14, 2021 - 10:56 am